
Food for Artificial Intelligence

Overview of training datasets for agricultural computer vision tasks

Author: Florian Kitzler

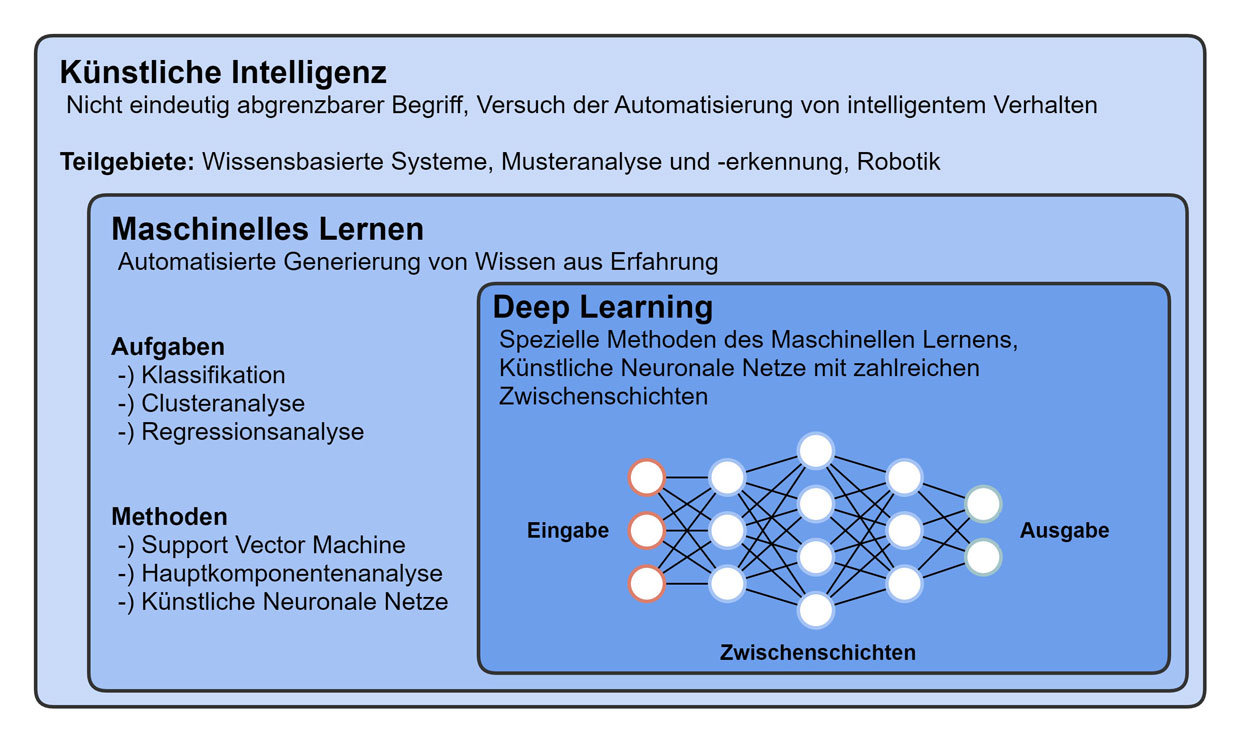

Figure 1: Attempt to define Artificial Intelligence, examples for tasks and methods of Machine

Artificial Neural Networks (ANNs) with a high number of hidden layers, so-called Deep Learning (DL) models, are used for the following tasks in the field of computer vision:

- Classification – The whole image is categorized

- Detection – Objects within the image are classified and localized

- Semantic Segmentation – Each pixel within the image is categorized
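To make the difference between the three tasks concrete, here is a minimal sketch of what the target labels could look like for one tiny image. The class ids, the 4x6 image size, and the box layout `(class_id, x_min, y_min, x_max, y_max)` are assumptions chosen for illustration, not a format prescribed by any dataset or tool.

```python
# Hypothetical class ids for a toy 4x6 image (illustrative only).
CLASSES = {0: "soil", 1: "crop", 2: "weed"}

# Classification: a single label for the whole image.
classification_label = 1  # "crop"

# Detection: one (class_id, x_min, y_min, x_max, y_max) box per object,
# with inclusive pixel coordinates.
detection_labels = [
    (1, 0, 0, 2, 2),
    (2, 3, 1, 5, 3),
]

# Semantic segmentation: one class id per pixel (height x width).
segmentation_label = [
    [1, 1, 1, 0, 0, 0],
    [1, 1, 1, 2, 2, 2],
    [1, 1, 1, 2, 2, 2],
    [0, 0, 0, 2, 2, 2],
]


def annotation_values(height, width, num_boxes):
    """Count how many values have to be annotated per image for each task."""
    return {
        "classification": 1,
        "detection": num_boxes * 5,
        "segmentation": height * width,
    }


counts = annotation_values(4, 6, len(detection_labels))
print(counts)
```

Even in this toy case, the segmentation label carries far more values than the other two, which hints at why pixel-wise annotation is the most labor-intensive of the three.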

Because of their many layers, and therefore many parameters, Deep Learning models require vast amounts of training data. The resulting models are non-mechanistic: they cannot be expressed as a compact, interpretable mathematical formula. They can be regarded as black-box models with no readily comprehensible connection between input and output.
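How quickly the number of parameters grows with depth can be illustrated with a rough count for a fully connected network, where each layer contributes `n_in * n_out` weights plus `n_out` biases. This is a simplified sketch: the layer widths below are hypothetical, and real computer vision models typically use convolutional layers, whose parameters are counted differently.

```python
def count_parameters(layer_sizes):
    """Return weights plus biases of a fully connected network."""
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Hypothetical layer widths: a 32x32 RGB input and 4 output classes.
shallow = [32 * 32 * 3, 64, 4]
deep = [32 * 32 * 3, 512, 512, 512, 512, 4]

shallow_params = count_parameters(shallow)
deep_params = count_parameters(deep)

print(shallow_params)  # 196932
print(deep_params)     # 2363396
```

Every one of these parameters has to be estimated from data, which is one way to see why deeper models need larger training datasets.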

Deep Learning tasks in agriculture

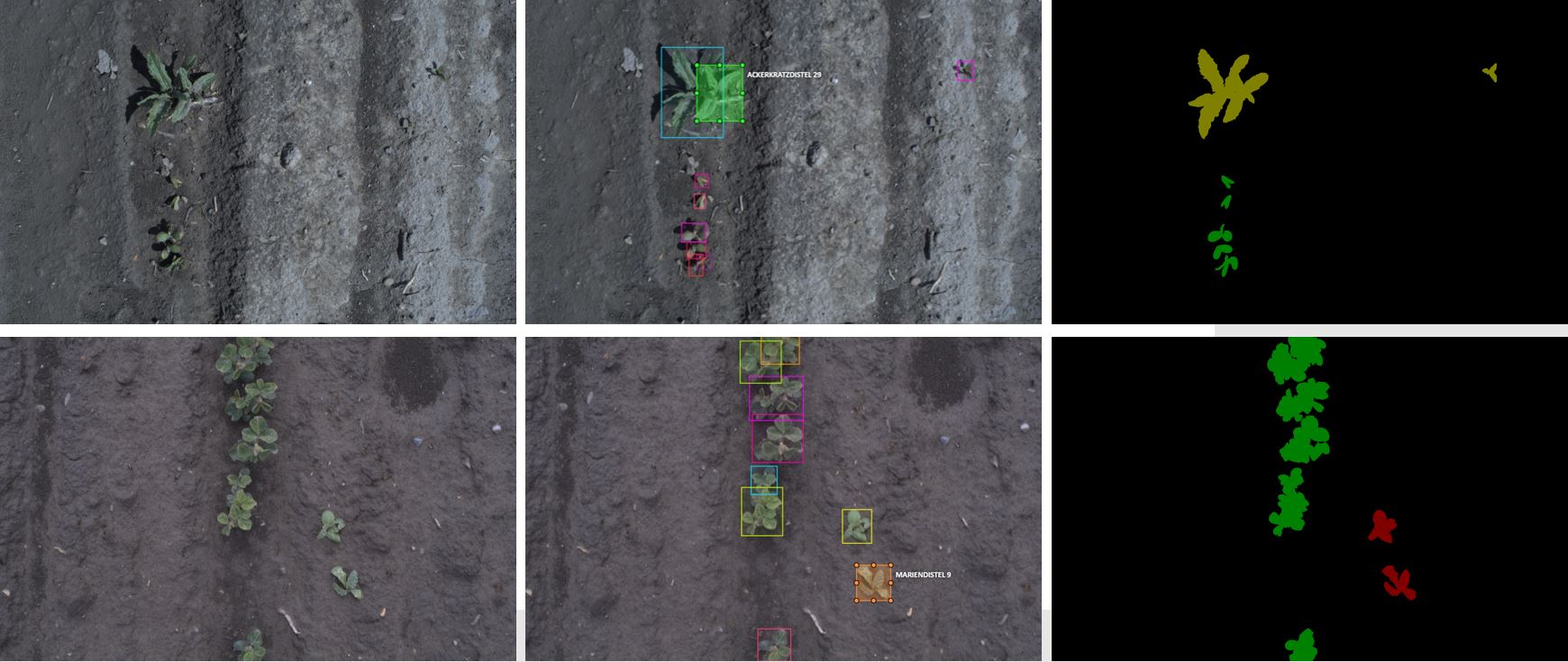

One goal of the project “Integration of plant parameters for intelligent agricultural processes” is to derive plant parameters from digital images using computer vision methods. Plant species will be classified with Deep Learning models for Semantic Segmentation. In practice, this can be used for weed recognition and as decision support for intelligent weed regulation measures.

For the training data, images of different plants will be taken and manually annotated. The annotation requirements depend on the individual task; in some cases, additional information may be needed for the algorithm to work correctly. For classification, a single label per image is sufficient, whereas detection requires a bounding box around each object. For Semantic Segmentation, each pixel must be assigned to a class, which can be done by drawing polygons around each annotated object in the image. The result is a so-called segmentation mask, in which each class is represented by a different color and each pixel is colored according to its class membership.

Figure 2: Images of soybean (left), bounding box annotations (middle), and segmentation masks (right; green: soybean, yellow: creeping thistle, red: milk thistle, black: soil) for various growth stages of soybean (top to bottom). Annotations were created with the CVAT software [1].
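The step from polygon annotations to a colored segmentation mask can be sketched in plain Python. The colors follow the legend of Figure 2, while the numeric class ids, the toy polygons, and the function names are assumptions made for this example; this is not the export format or algorithm of CVAT.

```python
# Colors follow the Figure 2 legend; the numeric class ids are assumed.
CLASS_COLORS = {
    0: (0, 0, 0),       # soil: black
    1: (0, 255, 0),     # soybean: green
    2: (255, 255, 0),   # creeping thistle: yellow
    3: (255, 0, 0),     # milk thistle: red
}


def point_in_polygon(x, y, polygon):
    """Even-odd ray casting test of a point against a closed polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that cross the horizontal line through y can matter.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(height, width, annotations):
    """Build a class-id mask; pixels outside all polygons stay soil (0)."""
    mask = [[0] * width for _ in range(height)]
    for class_id, polygon in annotations:
        for row in range(height):
            for col in range(width):
                # Test the pixel center against the polygon.
                if point_in_polygon(col + 0.5, row + 0.5, polygon):
                    mask[row][col] = class_id
    return mask


def colorize(mask):
    """Replace each class id by its RGB color."""
    return [[CLASS_COLORS[class_id] for class_id in row] for row in mask]


# Two hypothetical polygon annotations on a 4x6 image.
annotations = [
    (1, [(0, 0), (3, 0), (3, 3), (0, 3)]),  # soybean
    (2, [(4, 2), (6, 2), (6, 4), (4, 4)]),  # creeping thistle
]
mask = rasterize(4, 6, annotations)
color_mask = colorize(mask)

for row in mask:
    print(row)
```

The class-id mask is what a segmentation model would usually be trained on; the colored version is mainly useful for visual inspection, as in the right column of Figure 2.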

Table 1: Overview of open-access training datasets in the fields of autonomous driving and precision agriculture.

| Dataset | Year | Classes | Images | Annotation type | Application |
| --- | --- | --- | --- | --- | --- |
| KITTI [2] | 2013 | 34 | 400 | Semantic segmentation | Autonomous driving |
| Cityscapes [3] | 2016 | 19 | 5000 | Semantic segmentation | Autonomous driving |
| BDD100K [4] | 2020 | 40 | 10000 | Semantic segmentation | Autonomous driving |
| CWFID [5] | 2015 | 2 | 60 | Semantic segmentation | Carrot vs. weed |
| Sugar-Beet [6] | 2017 | 2 | 10036 | Semantic segmentation | Sugar beet vs. weed |
| DeepWeeds [7] | 2019 | 9 | 17509 | Classification | Weed recognition |
| Agriculture-Vision [8] | 2020 | 9 | 94986 | Semantic segmentation | Field anomaly |


Citation:

F. Kitzler, "Nahrung für Künstliche Intelligenz: Überblick von Trainingsdaten für die Bildanalyse in der Landwirtschaft". In: DiLaAg Innovationsplattform [Weblog]. Online publication: https://dilaag.boku.ac.at/innoplattform/en/2021/02/10/food-for-artificial-intelligence/, 2020.

Sources:

| [1] | "OpenCV CVAT," 10 07 2020. [Online]. Available: https://github.com/opencv/cvat |

| [2] | A. Geiger, P. Lenz, C. Stiller and R. Urtasun, "Vision meets robotics: The KITTI dataset," The International Journal of Robotics Research, vol. 32, pp. 1231–1237, 2013. |

| [3] | M. Cordts, M. Omran, S. Ramos, T. Rehfeld, M. Enzweiler, R. Benenson, U. Franke, S. Roth and B. Schiele, "The Cityscapes Dataset for Semantic Urban Scene Understanding," in Proc. of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016. |

| [4] | F. Yu, H. Chen, X. Wang, W. Xian, Y. Chen, F. Liu, V. Madhavan and T. Darrell, "BDD100K: A diverse driving dataset for heterogeneous multitask learning," in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020. |

| [5] | S. Haug and J. Ostermann, "A Crop/Weed Field Image Dataset for the Evaluation of Computer Vision Based Precision Agriculture Tasks," in Computer Vision – ECCV 2014 Workshops, Cham, 2015. |

| [6] | N. Chebrolu, P. Lottes, A. Schaefer, W. Winterhalter, W. Burgard and C. Stachniss, "Agricultural robot dataset for plant classification, localization and mapping on sugar beet fields," The International Journal of Robotics Research, vol. 36, pp. 1045–1052, 7 2017. |

| [7] | A. Olsen, D. A. Konovalov, B. Philippa, P. Ridd, J. C. Wood, J. Johns, W. Banks, B. Girgenti, O. Kenny, J. Whinney et al., "DeepWeeds: A multiclass weed species image dataset for deep learning," Scientific Reports, vol. 9, pp. 1–12, 2019. |

| [8] | M. T. Chiu, X. Xu, Y. Wei, Z. Huang, A. G. Schwing, R. Brunner, H. Khachatrian, H. Karapetyan, I. Dozier, G. Rose et al., "Agriculture-Vision: A large aerial image database for agricultural pattern analysis," in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020. |

| [9] | A. Ruckelshausen, P. Biber, M. Dorna, H. Gremmes, R. Klose, A. Linz, F. Rahe, R. Resch, M. Thiel, D. Trautz et al., "BoniRob–an autonomous field robot platform for individual plant phenotyping," Precision Agriculture, vol. 9, p. 1, 2009. |